Functionalism is a group of theories that build on behaviorism and identity theory in various ways. So the introduction and motivation for functionalism will often take the form, “Remember when we were talking about behaviorism/identity theory, we said… Now functionalists respond by …”

Here are two reminders to start us off.

Remember when we were talking about identity theory, we said that a given mental state, like pain, might turn out to be correlated with different brain processes in different creatures. One response to that is to introduce different notions, like “pain-in-humans” and “pain-in-squid” and so on — and to treat these as identical to different brain processes — but to reject talk of “pain-in-general.” A shortcoming of that response is we have the intuition that pain is a single kind of mental state that we might share with squid and other creatures. A new, different response is to say that there is a property of “pain-in-general,” it’s just that this property is realized or implemented in different ways in different creatures — in something like the way that dispositions like fragility can have different categorical bases in different objects. As we’ll see, this kind of move plays a large role in functionalist theories.

Remember when we were talking about behaviorism, we said that typically there won’t be any one-to-one correlations between mental states and behavior. How one behaves depends on many mental states one has in combination. Usually there’s no distinctive behavior associated with a single mental state in isolation. If you’re in pain, exactly how you’ll behave depends on how much sympathy you want, how important you think it is to be stoic, whether you’re distracted thinking about something else, and so on. If you have beliefs about Charlotte, how you respond to my geography questions will depend on whether you want to be helpful or playful or deceptive, whether you understand the language I’m speaking in, and so on.

This raised a threat of regress or circularity: it looked like it might be impossible for the behaviorist to define any one mental state in terms of behavior, until they have already defined lots of other mental states, first. To define pain, they’d have to first define wanting sympathy, thinking stoicism is important, being distracted, and so on. To define each of those, they’d have to define yet other mental states, and so on.

As we’ll see, working around this threat also plays a large role in functionalism.

Unlike the behaviorist, the functionalist wants to side with the identity theorist in thinking that mental states are real internal states that cause our behavior. But functionalists will want to retain the behaviorist’s idea that there are close conceptual connections between our mental states and the kinds of behavior they usually generate. So they will also have to wrestle with this threat of regress or circularity. How can they describe the connections between any one mental state and behavior, until they have already defined lots of other mental states?

I illustrated the second reminder (the threat of regress or circularity) in class with the “bolt and anchor” systems. (“Bolts” here could also be called “pins” or “rivets” or “hooks.”)

It’s natural to count these all as bolt-and-anchors. But it’s challenging to define what makes something a bolt, and what makes something else an anchor. If I just describe the specific mechanical properties of one of the anchors, that can leave it mysterious why it doesn’t work with arbitrarily chosen other bolts (for example, you can’t combine a velcro anchor with a metal bolt). But if in explaining what an anchor is, you start to talk about the bolt it’s paired with, then it looks like you have to define bolts before you can explain what anchors are. But then you’ll have the reverse problem when you try to define bolts…

There are two techniques the functionalist uses to get around this threat of regress or circularity: one in terms of formal automata (machine tables, Turing Machines, and so on), and the other in terms of the Ramsey/Lewis method for defining terms. Both techniques involve defining a whole system of concepts as a single package (so we’d define bolts and anchors at the same time). Both techniques also allow for the defined items to be constructed in various mechanical ways, so they also address the first reminder above, about a single mental state being realized differently in different creatures. We’ll talk about machine tables today and the Ramsey/Lewis method a bit later.

In class, we thought about a problem where we had to design a rudimentary Coke machine. Coke costs 15¢, and the machine only accepts nickels or dimes. We have to decide what to do if more than 15¢ is deposited into the machine. One option would be to dispense change along with a Coke; but we decided instead to keep the extra money and apply it as credit towards the next purchase.

Here is the design we came up with:

The Coke machine has three states: ZERO, FIVE, TEN. If a nickel is inserted and the machine is in state ZERO, it goes into state FIVE and waits for more input. If a nickel is inserted and the machine is already in state FIVE, it goes into state TEN and waits for more input. If a nickel is inserted and the machine is already in state TEN, then the machine dispenses a Coke and goes into state ZERO. If a dime is inserted and the machine is in state ZERO, it goes into state TEN and waits for more input. If a dime is inserted and the machine is already in state FIVE, then it dispenses a Coke and goes into state ZERO. If a dime is inserted and the machine is already in state TEN, then it dispenses a Coke and goes into state FIVE.

Notice how the system’s response to input depends on what internal state the system is already in, when it received the input. When you put a nickel in, what internal state the Coke machine goes into depends on what its internal state was when you inserted the nickel.

Our Coke machine’s behavior can be specified as a table, which tells us, for each combination of internal state and input, what new internal state the Coke machine should go into, and what output it should produce:

| Input | If in state ZERO | If in state FIVE | If in state TEN |
|---|---|---|---|
| Nickel | goto state FIVE | goto state TEN | goto state ZERO and dispense/output Coke |
| Dime | goto state TEN | goto state ZERO and output Coke | goto state FIVE and output Coke |
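The table above can be written down almost word for word as a lookup structure. Here is a minimal Python sketch of that idea. The names `MACHINE_TABLE` and `run` are my own illustrative choices, not part of the design we came up with in class, and this is just one of many ways the table could be written down.

```python
# Machine table for the Coke machine:
# (current state, input) -> (next state, output or None).
# MACHINE_TABLE and run are hypothetical names chosen for this sketch.
MACHINE_TABLE = {
    ("ZERO", "nickel"): ("FIVE", None),
    ("FIVE", "nickel"): ("TEN", None),
    ("TEN", "nickel"): ("ZERO", "Coke"),
    ("ZERO", "dime"): ("TEN", None),
    ("FIVE", "dime"): ("ZERO", "Coke"),
    ("TEN", "dime"): ("FIVE", "Coke"),
}


def run(coins, state="ZERO"):
    """Feed a sequence of coins to the machine.

    Returns the final internal state and the list of outputs produced.
    """
    outputs = []
    for coin in coins:
        # What happens next depends on the input AND the current state.
        state, output = MACHINE_TABLE[(state, coin)]
        if output is not None:
            outputs.append(output)
    return state, outputs


if __name__ == "__main__":
    print(run(["nickel", "dime"]))  # 15 cents deposited
    print(run(["dime", "dime"]))    # 20 cents deposited, 5 cents kept as credit
```

Notice that the function never consults anything except the table, the current state, and the next coin. That is all a machine table asks of an implementation.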

We call tables of this sort machine tables. The table constitutes a certain (very simple) kind of computer program or algorithm. Any mechanical device that implemented the pattern specified in the table would have three different configurations that it could be in, corresponding to the three states ZERO, FIVE, and TEN. Implementing the pattern would require linking these states up in such a way that each combination of state and input leads to the next state, and produces the output, that the table specifies.

Note that we didn’t say anything about what our Coke machine should be built out of. We just talked about how it should work, that is, how its internal states interacted with each other and with input to produce output. Anything which works in the way we specified would count. What stuff it’s made of wouldn’t matter, so far as our design goes. (See Kim p. 132, 144.) It might matter in terms of how big the machine needs to be, or how costly to repair, or how fast it runs. But we’re not worrying about those practical engineering issues.

Relatedly, we said nothing about how the internal states of the Coke machine were constructed. Some Coke machines may be in the ZERO state in virtue of having a LEGO gear in a certain position. Others in virtue of having a current pass through a certain transistor. Others in virtue of having a water balloon be half-full. We understand what it is for the Coke machine to be in these states in terms of how the states interact with each other and with input to produce output. Any system of states which interact in the way we described counts as an implementation of our ZERO, FIVE, and TEN states.

So our machine tables show us how to understand a system’s internal states in such a way that they can be implemented or realized via different physical mechanisms. This point is put by saying that the internal states described in our machine tables are multiply realizable.
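To make multiple realizability concrete, here is a rough Python sketch of two devices that are built differently on the inside but implement the same Coke machine table. Both classes, and the helper functions `behavior` and `same_behavior`, are hypothetical names invented for this illustration. One class stores its state as a label and looks transitions up in a table; the other just keeps a running credit in cents and does arithmetic.

```python
from itertools import product


class LookupMachine:
    """Realizes the states as labels and the transitions as a lookup table."""

    TABLE = {
        ("ZERO", "nickel"): ("FIVE", None),
        ("FIVE", "nickel"): ("TEN", None),
        ("TEN", "nickel"): ("ZERO", "Coke"),
        ("ZERO", "dime"): ("TEN", None),
        ("FIVE", "dime"): ("ZERO", "Coke"),
        ("TEN", "dime"): ("FIVE", "Coke"),
    }

    def __init__(self):
        self.state = "ZERO"

    def insert(self, coin):
        self.state, output = self.TABLE[(self.state, coin)]
        return output


class CounterMachine:
    """Realizes the same states as a running credit in cents."""

    VALUES = {"nickel": 5, "dime": 10}

    def __init__(self):
        self.credit = 0  # 0, 5, and 10 play the roles of ZERO, FIVE, and TEN

    def insert(self, coin):
        self.credit += self.VALUES[coin]
        if self.credit >= 15:
            self.credit -= 15  # keep any extra money as credit
            return "Coke"
        return None


def behavior(machine_class, coins):
    """Outputs a freshly built machine produces for a sequence of coins."""
    machine = machine_class()
    return [machine.insert(coin) for coin in coins]


def same_behavior(max_length=6):
    """Compare the two machines on every coin sequence up to max_length."""
    for length in range(max_length + 1):
        for coins in product(["nickel", "dime"], repeat=length):
            if behavior(LookupMachine, coins) != behavior(CounterMachine, coins):
                return False
    return True


if __name__ == "__main__":
    print(same_behavior())
```

From the outside, and from the point of view of the machine table, there is no difference between the two. Checking sequences up to a fixed length is of course only a spot check, not a proof, but for a three-state machine it covers every state and input combination many times over.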

Computers are just very sophisticated versions of our Coke machine. They have a large number of internal states. Programming the computer involves linking these internal states up to each other and to the outside of the machine so that, when you put some input into the machine, the internal states change in predictable ways, and sometimes those changes cause the computer to produce some output (dispense a Coke).

From a practical engineering perspective, it can make a big difference how a particular program gets physically implemented. But from the kind of design perspective we were taking, the underlying hardware doesn’t matter. Only the software (machine table) does. That is, it only matters that there be some hardware with some internal states that causally interact with each other, and with input and output, in the way our software specifies. Any hardware that does this will count as an implementation or realization of our machine table.


The functionalist about the mind thinks that all there is to being intelligent, really having thoughts and other mental states, is implementing a complicated program. In a slogan, our brain is the hardware and our minds are the software. (As Block puts it, “The mind is the software of the brain.”) In humans, this software is implemented by human brains, but it could also be implemented on other hardware, like a squid brain, or whatever nervous system Europan aliens have, or a digital computer.

And if it were, then the squid, Europan, and computer would have real thoughts and mental states, too. So long as there’s some hardware with some internal states that stand in the right causal relations to each other and to input and output, you’ve got a mind. The desire to make coffee would be implemented by certain neurons firing in our brains, but differently in aliens with differently organized nervous systems, and differently yet again inside the computer. What these systems have in common is that they all implement the same pattern of N states, that interact with each other, with inputs and with outputs, in the way described by a single machine table program.

The functionalist does not claim that running just any kind of computer program suffices for mentality. It has to be the right kind of program. Running our Coke machine program doesn’t make something intelligent. Nor does running an ELIZA-style chatbot. Running the same “program” that the neurons in human brains run would make something intelligent.

Here is Lycan in support of functionalism (p. 130):

What matters to mentality is not the stuff of which one is made, but the complex way in which that stuff is organized. If after years of close friendship we were to open Harry [who has a computer brain] up and find that he is stuffed with microelectronic gadgets instead of protoplasm, we would be taken aback — no question. But our Gestalt clash on this occasion would do nothing at all to show that Harry does not have his own rich inner qualitative life.

In the 1930s philosophers and logicians were trying to better understand what it is for a question to be answerable in a purely mechanical or automatic way. We call this effectively calculating an answer to the question. The answer should be derived by a procedure whose steps are straightforward and explicit and unambiguous. They should tell you at each step exactly what to do next. They should require no external information or ingenuity to execute. This is the kind of procedure you might program an unintelligent machine to perform. The procedure should be guaranteed to eventually (after some finite but maybe not limited-in-advance duration) deliver correct answers.

Theorists who contributed to this work include Gödel, Church, Rosser, Kleene, and especially Alan Turing, the same guy who proposed the Turing Test. We’ll hear more about Turing’s ideas in a moment. Nowadays these issues are studied by logicians, and some mathematicians, philosophers, linguists, and computer scientists.

These theorists work with mathematical models of simple but idealized machines, called formal automata. Different kinds of automata are distinguished by specifying which resources they have access to. You’ve read about Turing Machines, which we’ll discuss shortly. These are one kind of formal automaton, an especially powerful kind. It turns out that any question we know how to effectively determine an answer to, can be answered by a Turing Machine. For theoretical purposes, it’s often interesting to figure out whether weaker kinds of automata would also be able to answer a question. The Coke machine we built above is one such kind of weaker automaton, called a finite automaton.

All of these automata are allowed to take arbitrarily long to calculate their final answer, so long as the answer is correct and they finish in some finite amount of time.

These automata aren’t real physical computers. They are abstract mathematical blueprints. Some of them are blueprints for machines we can never build, because (in addition to being allowed to take as long as they want to finish) they also need arbitrarily long memory tapes. Others we can build, but they wouldn’t be very fast or efficient. Theorists who work with these formal automata aren’t using them to figure out how to build new laptops. It’s better to think of the automata as recipes or algorithms specifying strategies for answering a question. Theorists are using them to figure out which questions can be answered by which strategies.

Look back at our Coke machine table. Suppose such a system were running, and we wanted to take a snapshot of it, so that we could pause it, take it apart, and put it back together later in such a way that it started up again right where it left off. What information would we need to keep track of? In our Coke machine, this is very simple. All we need to keep track of is which state (ZERO, FIVE, or TEN) the automaton was in when we paused it. In other words, which column in our machine table was the active one, that determined what would happen next when we got more input.
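Here is a small Python sketch of the pause-and-resume idea, under the assumption that we represent the machine table as a dictionary (the names `TABLE` and `run` are made up for this example). The only thing carried across the pause is the name of a state.

```python
# (current state, input) -> (next state, output or None)
TABLE = {
    ("ZERO", "nickel"): ("FIVE", None),
    ("FIVE", "nickel"): ("TEN", None),
    ("TEN", "nickel"): ("ZERO", "Coke"),
    ("ZERO", "dime"): ("TEN", None),
    ("FIVE", "dime"): ("ZERO", "Coke"),
    ("TEN", "dime"): ("FIVE", "Coke"),
}


def run(coins, state="ZERO"):
    """Run the machine from the given state; return (final state, outputs)."""
    outputs = []
    for coin in coins:
        state, output = TABLE[(state, coin)]
        outputs.append(output)
    return state, outputs


coins = ["dime", "nickel", "dime", "dime"]

# Run straight through, without interruption.
final_state, outputs = run(coins)

# Run two coins, pause, and record the snapshot: just one state name.
snapshot, first_outputs = run(coins[:2])

# "Rebuild" the machine later from nothing but the snapshot, and continue.
resumed_state, later_outputs = run(coins[2:], state=snapshot)

if __name__ == "__main__":
    print(snapshot)
    print((resumed_state, first_outputs + later_outputs) == (final_state, outputs))
```

The resumed run ends in the same state and produces the same outputs as the uninterrupted one, which is what it means for the state name to be a complete snapshot of this kind of machine.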

Finite automata like our Coke machine can model any computer that has a fixed-in-advance amount of memory. My laptop is such a computer. I might upgrade the amount of RAM it has, but there are only so many different possible memory locations it knows how to access (2^64 bytes). I might upgrade its SSD storage device, but this has limits too. So there is some maximum amount of storage my laptop can make use of. Each way that all that storage can be configured gives us a different possible snapshot for my laptop. This is an enormous number of possibilities, but it’s finite. So there is a table like our Coke machine table, only with many many more columns, one for each possible snapshot, that represents how my laptop works. Anything my laptop can be programmed to do, can also be represented in such a machine table.
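To see how a device with fixed memory turns into a machine table, here is a toy Python sketch. The "device" is entirely hypothetical: it has only 3 bits of memory and understands two commands, `tick` (add one, wrapping around) and `reset`. A real laptop differs in scale, not in principle. The sketch enumerates every possible snapshot of the memory and records, for each snapshot and input, which snapshot comes next.

```python
from itertools import product

MEMORY_BITS = 3  # hypothetical toy device; a real laptop has vastly more
COMMANDS = ("tick", "reset")


def step(memory, command):
    """The toy device's program: memory is a tuple of bits read as a number."""
    value = int("".join(str(bit) for bit in memory), 2)
    if command == "tick":
        value = (value + 1) % (2 ** MEMORY_BITS)  # wrap around when full
    elif command == "reset":
        value = 0
    bits = format(value, f"0{MEMORY_BITS}b")  # back to a fixed-width bit string
    return tuple(int(bit) for bit in bits)


def build_machine_table():
    """One table column per possible snapshot of the device's memory."""
    snapshots = list(product([0, 1], repeat=MEMORY_BITS))
    return {
        (snapshot, command): step(snapshot, command)
        for snapshot in snapshots
        for command in COMMANDS
    }


table = build_machine_table()

if __name__ == "__main__":
    print(len(table))                    # number of (snapshot, input) entries
    print(table[((0, 1, 1), "tick")])    # where snapshot 011 goes on a tick
```

With n bits of memory there are 2^n snapshots, so the table grows extremely fast as memory grows. But for any fixed n it is finite, which is the point of the paragraph above.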

There are problems that you can give my laptop that it wouldn’t be able to solve. Some of them might not be solvable at all. But some of them might have been solvable, if only the laptop had more storage/memory. So my laptop can’t solve them, but a machine that worked by the same principles and had more possible states could. For some theoretical purposes, it’s useful to think about automata that don’t have limits to how much memory they’ll make use of. Anytime such an automaton runs, it would only access some finite amount of memory. But we don’t limit in advance how much memory it’s allowed to use. These automata have both a set of fixed states, arranged in a machine table that describes which state leads to which next state, and also an auxiliary memory tape that’s “unbounded” or can be arbitrarily long. Instead of accepting nickels and dispensing Cokes, they have instructions for reading and writing symbols to their memory tape.

One simple such machine is called a pushdown automaton. Its memory tape is a stack, and it can only push new symbols onto, and pop them off of, the top of the stack. Turing Machines are more complex. They have a read/write head that can move back and forth to arbitrary locations on their memory tape. Sometimes their tape is described as unlimited in both directions; other times as unlimited in just one. It turns out to make no difference to their mathematical power which of those options we choose, so let’s imagine their tape as unlimited in just one direction.

If we take a snapshot of these automata, what information would we need to keep track of? We’d still need to keep track of which column in our machine table was the active one, that determines what happens next. But we’d also need to keep track of what symbols are currently stored on their auxiliary memory tape. In the pushdown automaton, that would be enough, because the automaton only has access to the “top” of its tape. In the Turing Machine, we’d also have to keep track of where the read/write head is positioned on the tape.
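Here is a rough Python sketch of what a Turing Machine snapshot has to contain. Everything in it is an assumption made for illustration: the `Snapshot` class, the two-row `TABLE`, and the toy task (appending one more `1` to a row of `1`s) are not from the readings. The tape is unlimited in one direction, and it grows on demand. For a pushdown automaton the snapshot would need only the state and the stack contents, with no head position.

```python
from dataclasses import dataclass

BLANK = "_"

# (state, symbol read) -> (next state, symbol to write, head movement)
# A toy machine: scan right over 1s, write a 1 on the first blank, halt.
TABLE = {
    ("SCAN", "1"): ("SCAN", "1", +1),
    ("SCAN", BLANK): ("HALT", "1", 0),
}


@dataclass(frozen=True)
class Snapshot:
    state: str   # which column of the machine table is active
    tape: tuple  # the symbols currently stored on the tape
    head: int    # where the read/write head is positioned


def step(snap):
    """Advance the machine by one step, returning the next snapshot."""
    tape = list(snap.tape)
    if snap.head == len(tape):
        tape.append(BLANK)  # the tape grows on demand, in one direction
    state, write, move = TABLE[(snap.state, tape[snap.head])]
    tape[snap.head] = write
    return Snapshot(state, tuple(tape), snap.head + move)


def run(snap):
    """Keep stepping until the machine reaches its HALT state."""
    while snap.state != "HALT":
        snap = step(snap)
    return snap


start = Snapshot("SCAN", ("1", "1"), 0)
paused = step(step(start))  # pause after two steps

if __name__ == "__main__":
    print(run(start))
    print(run(paused) == run(start))  # resuming from the snapshot loses nothing
```

This particular table never moves the head left, so the sketch does not need to handle the left end of the tape. A fuller simulator would.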

For mathematical purposes, these various automata (and others) are importantly different. But do those differences matter in this class? People sometimes summarize functionalism as saying that our minds are Turing Machines. But it’s something of a historical accident that discussions in philosophy of mind have focused so often on those automata in particular. I think it just adds unnecessary and unhelpful complexity for us to think about Turing Machines. It raises questions like: where are our infinite memories? And when are we talking about “states” of the machine in the sense of columns in the machine table, and when about different possible snapshots the system could be in? There are only a fixed-in-advance number of the former, but for Turing Machines (and pushdown automata) an unlimited number of the latter.

In philosophy of mind, every lesson we can learn from Turing Machines can also be learned from the simpler finite automata, like our Coke machine. So I propose we focus on those instead. I’ll only sometimes make side comments about Turing Machines.


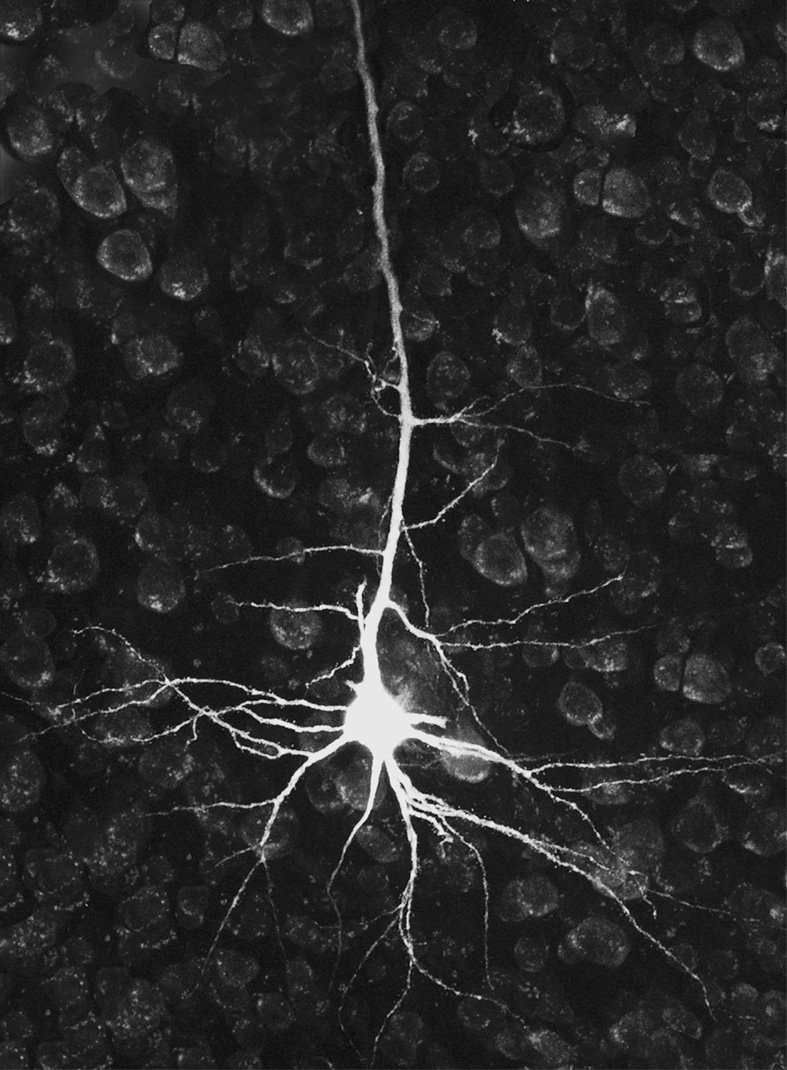

Our brains are devices that receive complex inputs from the sensory organs and send complex outputs to the motor system. The brain’s activity is well-behaved enough to be specifiable in terms of various (incredibly complicated) causal relationships, just like our Coke machine. We don’t yet know what those causal relationships are. But we know enough to be confident that they exist. So long as they exist, we know that they could be spelled out in some (incredibly complicated) machine table.

This machine table describes a computer program which as a matter of fact is implemented by your brain. But it could also be implemented by other sorts of hardware.

In the Lycan reading for this week, we hear about Henrietta, who has her neurons replaced one-by-one with synthetic digital substitutes. Eventually her brain has no more organic parts left. If the substitutes do the same causal work that the neurons they’re replacing did, then from the outside, we won’t see any difference in Henrietta. She’d keep walking and talking, processing information and making plans, the same as she always did. Lycan argues that Henrietta herself wouldn’t notice any difference either. When she has just one neuron replaced, none of its neighboring neurons “notice” any difference. And over the process of gradually replacing all her neurons, there doesn’t seem to be any point at which she’d lose her ability to think or feel. Her new brain would keep working the same way it always has.

So why should the difference in what they’re physically made of matter? Shouldn’t any hardware that does the same job as her original brain, in terms of causal relations between its internal states, sensory inputs, and outputs to the motor system, have the same mental life as the original?

This perspective is taken up in many places in fiction and film — such as Data in Star Trek, or the Replicants in Blade Runner…

In more limited ways, the characters in The Matrix and the Truncat story we read get to acquire certain abilities or memories, or have certain experiences, by loading new programs into their brains.

All of this speaks to the intuitive force of the idea that our mental lives are driven by our brain’s software. The functionalists argue that our actual organic neurophysiological hardware doesn’t matter. Our mental states are like the states of the Coke machine, in that they can be implemented in any hardware that does the right job, that is, that has the right causal patterns.